How to use Ollama as backend for Smart Components

August 08, 2024 by Anuraj

Docker AI dotnet

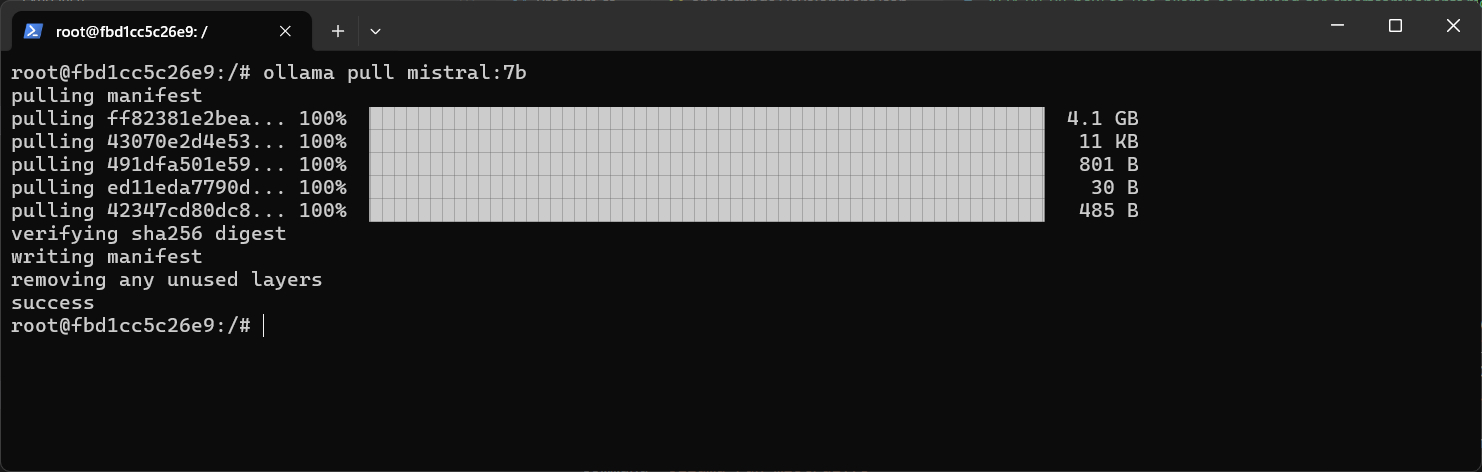

A few weeks back I wrote a blog post on Smart Components, a set of components that help .NET developers infuse AI into their applications with little or no code. By default they need an OpenAI endpoint and API key, but they can also run against Ollama.

To use Ollama, first make sure it is running. I am running Ollama in Docker with the command `docker run -d -v D:\OllamaModels:/root/.ollama -p 11434:11434 --name ollama ollama/ollama`. Next we need a model; I am using Mistral. To download it, open a shell in the running container with the command `docker exec -it ollama bash`, which opens a bash shell in the ollama container. Then run `ollama pull mistral:7b`, which downloads the mistral:7b model to the local machine. This may take some time. Here is the screenshot.
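Before touching the application, it can help to confirm that the pull finished. Here is a minimal Python sketch, assuming the container publishes port 11434 as in the command above. It uses Ollama's `GET /api/tags` route, which lists locally available models. The helper names (`model_names`, `is_model_available`, `fetch_tags`) are my own and not part of Ollama or Smart Components.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on the port published by the docker run command.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def is_model_available(tags_response: dict, model: str) -> bool:
    """Return True if `model` (for example "mistral:7b") has been pulled."""
    return model in model_names(tags_response)


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the running Ollama container which models it has locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)
```

With the container running, `is_model_available(fetch_tags(), "mistral:7b")` should return `True` once the download has completed.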

Next, in the application configuration, replace the OpenAI settings with the following:

    "SmartComponents": {
      "SelfHosted": true,
      "DeploymentName": "mistral:7b",
      "Endpoint": "http://localhost:11434/"
    }

No code change is required other than this; the components keep working as before.
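If the components return nothing, it is worth checking the endpoint outside the application first. The sketch below is one way to do that in Python, using the same values as the configuration above. It assumes the backend is reached through an OpenAI-style chat completions call, which Ollama exposes under `/v1/chat/completions`. The post does not state which route Smart Components actually calls, so treat the path as an assumption. `build_chat_request` and `send_chat_request` are hypothetical helper names.

```python
import json
import urllib.request

# Same values as the "SmartComponents" section in the post.
CONFIG = {
    "SelfHosted": True,
    "DeploymentName": "mistral:7b",
    "Endpoint": "http://localhost:11434/",
}


def build_chat_request(config: dict, prompt: str) -> tuple[str, dict]:
    """Return (url, JSON payload) for an OpenAI-style chat completion call."""
    # Assumption: the OpenAI-compatible route lives under /v1 on the endpoint.
    url = config["Endpoint"].rstrip("/") + "/v1/chat/completions"
    payload = {
        "model": config["DeploymentName"],
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return url, payload


def send_chat_request(url: str, payload: dict) -> str:
    """POST the payload and return the reply text; needs Ollama running."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `send_chat_request(*build_chat_request(CONFIG, "Say hello"))` should return a short reply from the model if the endpoint and model name are correct.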

Here are a few recommendations on self-hosting models. Quality and speed vary dramatically from one model to another. For best results, use GPT 3.5 Turbo or better, which responds quickly and with good quality.

Based on experimentation:

- GPT 3.5 Turbo (hosted service) produces good quality results and is fast.

- Mistral 7B produces good output for Smart TextArea, but is inconsistent for Smart Paste (filling some forms, but leaving others blank or introducing strange characters). Output speed is good for a local model, but on most workstations is still way slower than a hosted service.

- Llama2 7B output quality is insufficient (with Smart Paste, it puts strange or hallucinated text into form fields, and with Smart TextArea, it writes generic text that doesn’t well account for the configured phrases).

- Mixtral 47B produced good output for Smart TextArea, but wouldn’t follow the instructions properly for Smart Paste and hence left forms blank. Additionally it’s too big to run on most workstation GPUs so can be impractically slow.

Source: the Smart Components repository on GitHub.
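The speed observations above are qualitative. To put a rough number on your own machine, one option is to read the timing fields Ollama returns from a non-streaming `POST /api/generate` call: `eval_count` (tokens generated) and `eval_duration` (nanoseconds). The following is a small sketch under that assumption; `tokens_per_second` is a hypothetical helper, and the figures in the usage note are made up for illustration.

```python
def tokens_per_second(generate_response: dict) -> float:
    """Compute generation speed from a non-streaming /api/generate response.

    Ollama reports eval_count (tokens generated) and eval_duration
    (time spent generating, in nanoseconds).
    """
    count = generate_response["eval_count"]
    duration_ns = generate_response["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    # Convert nanoseconds to seconds before dividing.
    return count / (duration_ns / 1e9)
```

For example, a response with `eval_count` of 100 and `eval_duration` of 2,000,000,000 works out to 50 tokens per second. Running the same prompt against different local models gives a like-for-like comparison on your hardware.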

This way we can use Ollama as the backend for Smart Components.

Happy Programming


Copyright © 2026 Anuraj. Blog content licensed under the Creative Commons CC BY 2.5 | Unless otherwise stated or granted, code samples licensed under the MIT license. This is a personal blog. The opinions expressed here represent my own and not those of my employer. Powered by Jekyll. Hosted with ❤ by GitHub