Running DeepSeek-R1 locally for free

January 28, 2025 by Anuraj

dotnet AI DeepSeek

In this blog post, we’ll learn how to run DeepSeek-R1 locally for free. DeepSeek-R1 is a new large language model that is explicitly designed as a “reasoning model”: it works through a problem step by step before giving its final answer. Reasoning models like this are widely expected to drive key advances in large language models in 2025.

To run DeepSeek-R1 locally, we first need to install Ollama, a tool for downloading and running large language models on our own machine. We can download it from https://ollama.com

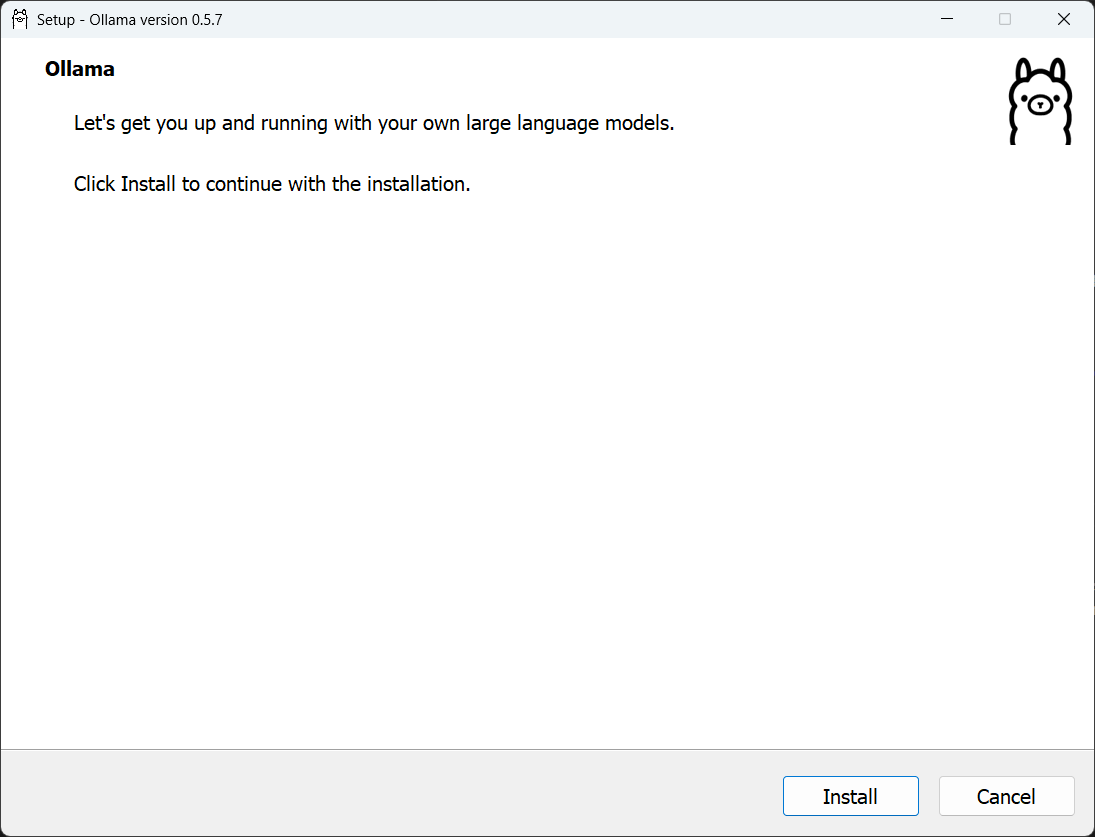

Once the installation file is downloaded, run it. It will show an installation dialog like this.

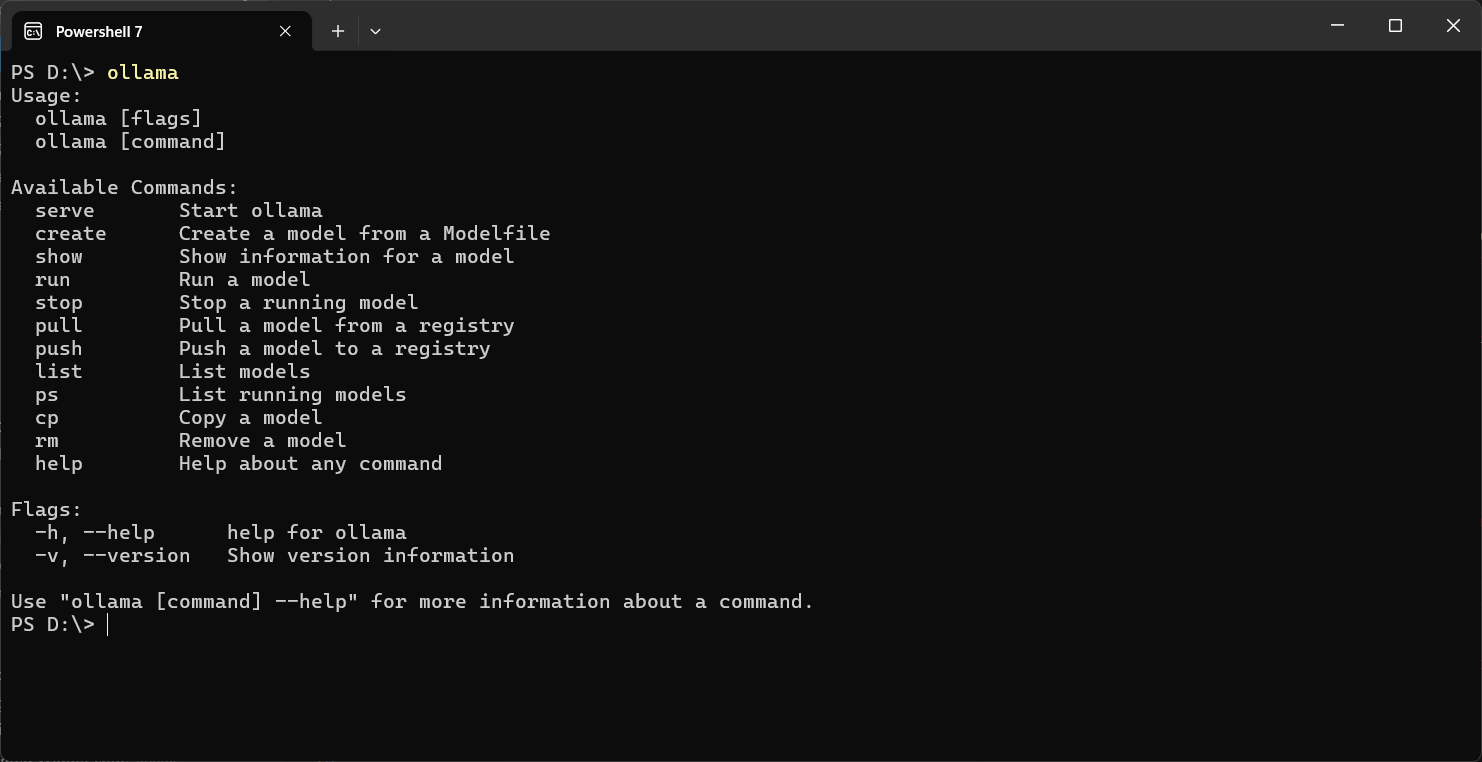

It is a simple and straightforward installation. Once it is completed, we can confirm that Ollama is available by running the `ollama` command in a terminal.
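We can also check from code that the Ollama server is up. The following is a minimal sketch using only Python's standard library; it assumes Ollama is listening on its default local address `http://localhost:11434` and uses the `GET /api/version` endpoint of Ollama's REST API. The helper name `ollama_version` is hypothetical.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local Ollama server


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[str]:
    """Return the server's version string, or None if Ollama cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # OSError covers refused connections, timeouts and HTTP errors (URLError/HTTPError);
        # ValueError covers a response body that is not valid JSON.
        return None


if __name__ == "__main__":
    version = ollama_version()
    print(f"Ollama {version} is running" if version else "Ollama is not reachable")
```

If this reports that Ollama is not reachable, start the Ollama app (or run `ollama serve`) and try again.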

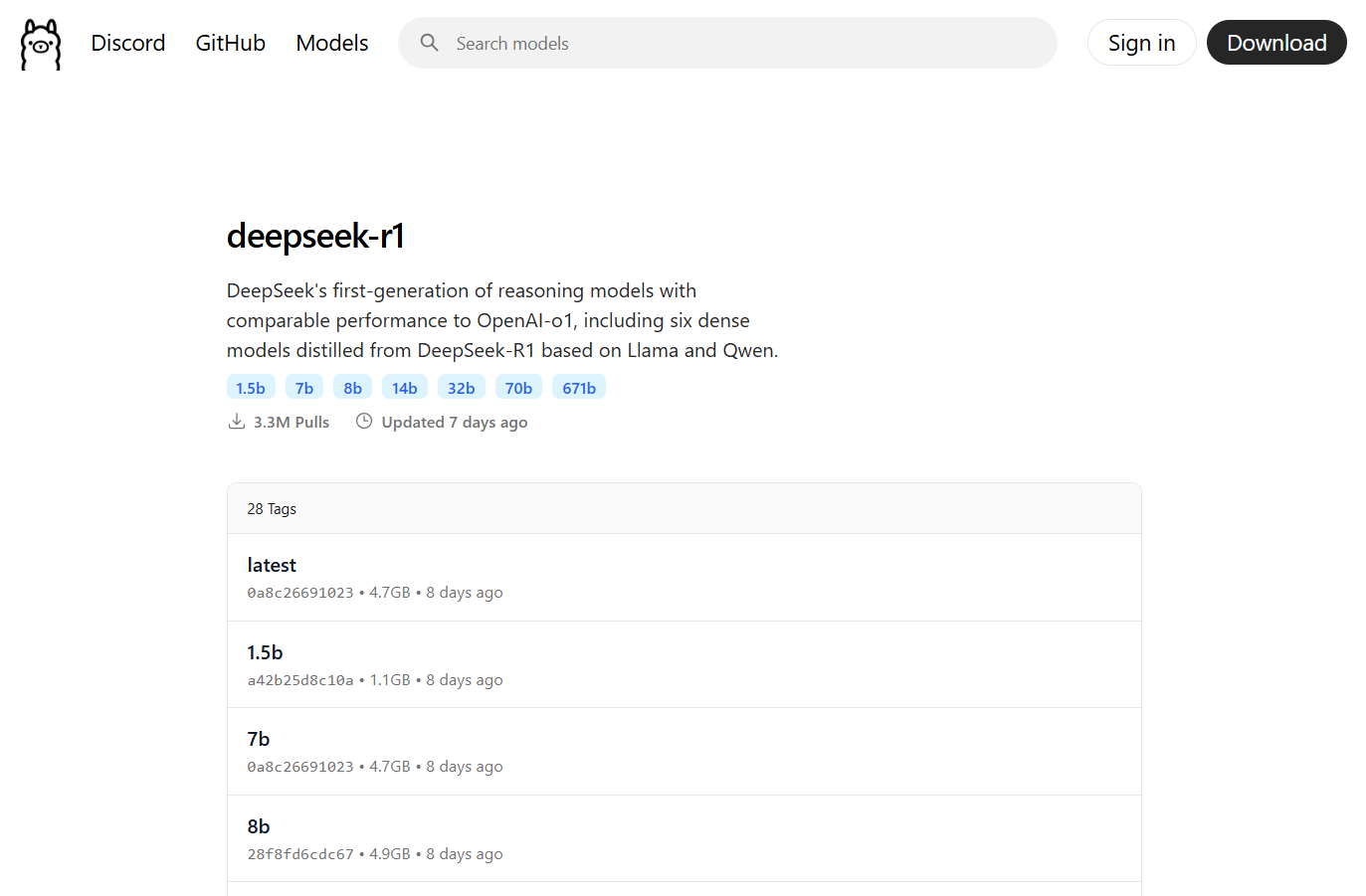

Now we are ready to install DeepSeek-R1. First, open the Ollama models page, which lists the various models we can run locally. Search for DeepSeek-R1 and decide which tag (model size) to use. I am using the 7b version.

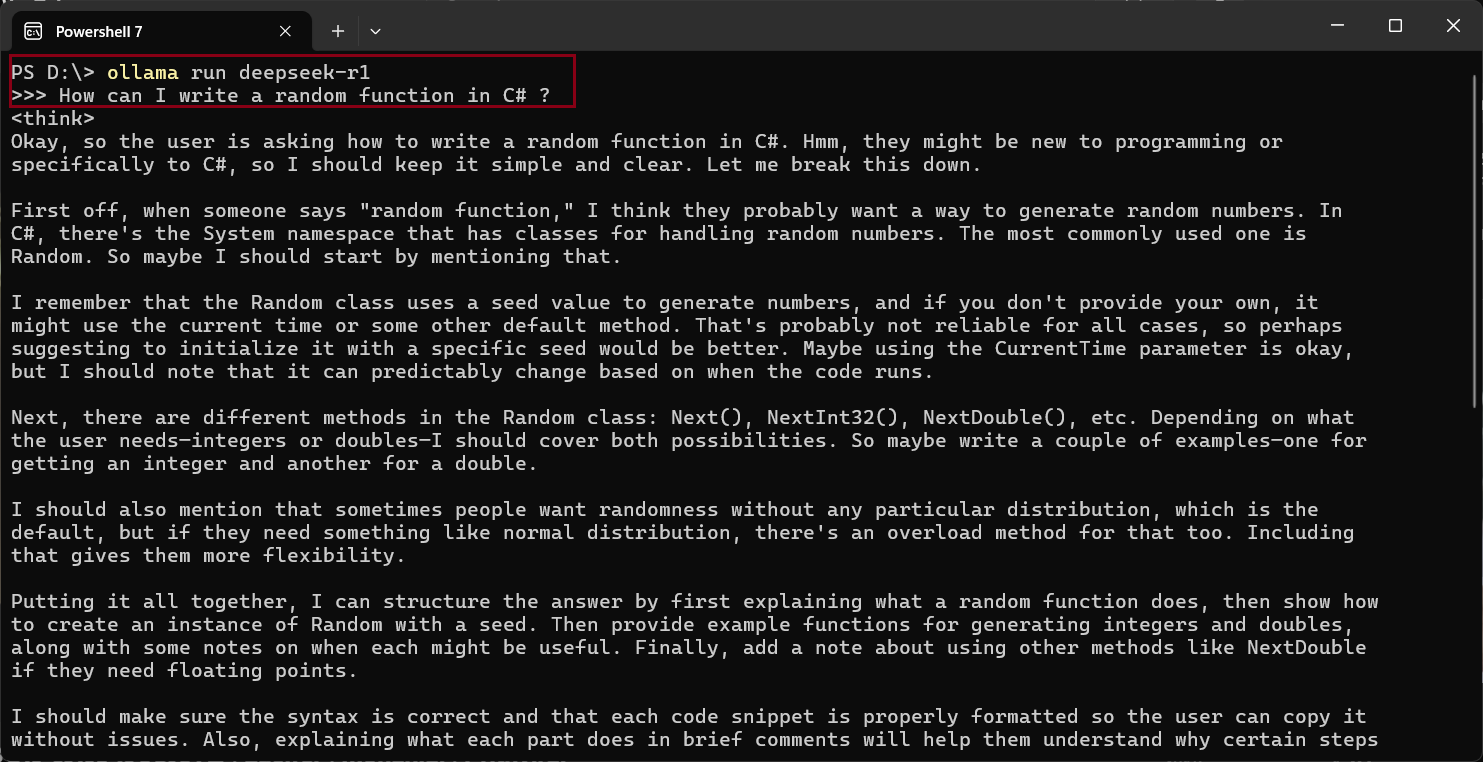

Then we can run the command `ollama run deepseek-r1:7b`, which downloads the model on first use and then opens an interactive chat prompt in the terminal.
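After the download finishes, `ollama list` shows the installed models in the terminal. The same check can be done from code; this minimal Python sketch assumes the default address `http://localhost:11434` and Ollama's `GET /api/tags` endpoint, which returns the locally pulled models with names such as `deepseek-r1:7b`. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local Ollama server


def installed_models(tags: dict) -> list:
    """Extract model names (name:tag) from an /api/tags response."""
    return [model["name"] for model in tags.get("models", [])]


def is_model_installed(tags: dict, name: str) -> bool:
    """True if `name`, including its tag (e.g. 'deepseek-r1:7b'), has been pulled."""
    return name in installed_models(tags)


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    """Call GET /api/tags, which lists the models already downloaded to this machine."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)
```

With Ollama running, `is_model_installed(fetch_tags(), "deepseek-r1:7b")` should return `True` once the pull has completed. Note that the tag matters: pulling `deepseek-r1` without a tag is stored as `deepseek-r1:latest`.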

We can ask questions and interact with the LLM from this interface. Because the model runs entirely on our own machine, we can use DeepSeek without worrying about privacy and data-sharing concerns. We can also use the Ollama connector in Semantic Kernel to use DeepSeek in our C# and .NET applications.
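Semantic Kernel's Ollama connector is the route for .NET. As a framework-free illustration of talking to the local model programmatically, here is a minimal Python sketch that calls Ollama's `POST /api/chat` endpoint directly, which is a different technique from the Semantic Kernel connector. It assumes the default address `http://localhost:11434`, requests a single non-streamed reply (`"stream": false`), and assumes DeepSeek-R1 wraps its reasoning in `<think>...</think>` tags inside the reply text; newer Ollama versions may return the reasoning in a separate field instead. `build_chat_payload`, `split_reasoning`, and `ask` are hypothetical names.

```python
import json
import re
import urllib.request
from typing import Tuple

OLLAMA_URL = "http://localhost:11434"  # assumed default address of the local Ollama server
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)  # assumed reasoning delimiters


def build_chat_payload(prompt: str, model: str = "deepseek-r1:7b") -> dict:
    """Request body for /api/chat asking for one complete (non-streamed) reply."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }


def split_reasoning(content: str) -> Tuple[str, str]:
    """Split a reply into (reasoning, answer); reasoning is empty if no <think> block exists."""
    match = THINK_RE.search(content)
    if match is None:
        return "", content.strip()
    reasoning = match.group(1).strip()
    answer = (content[: match.start()] + content[match.end():]).strip()
    return reasoning, answer


def ask(prompt: str, model: str = "deepseek-r1:7b") -> Tuple[str, str]:
    """Send one prompt to the local model and return (reasoning, answer)."""
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(build_chat_payload(prompt, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        reply = json.load(resp)
    return split_reasoning(reply["message"]["content"])
```

With the model pulled and Ollama running, `reasoning, answer = ask("Why is the sky blue?")` returns the model's step-by-step reasoning and its final answer separately. The first call can take a while because Ollama loads the model into memory.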

Happy Programming


Copyright © 2026 Anuraj. Blog content licensed under the Creative Commons CC BY 2.5 | Unless otherwise stated or granted, code samples licensed under the MIT license. This is a personal blog. The opinions expressed here represent my own and not those of my employer. Powered by Jekyll. Hosted with ❤ by GitHub